Intuition-1 mission

Intuition-1 is a satellite mission designed to observe the Earth with a hyperspectral instrument and an on-board computing unit capable of processing data in orbit using neural networks (artificial intelligence). The satellite will have enough processing power to segment hyperspectral images in orbit. Segmentation here means automatically identifying features in an image, that is, searching for similar patterns. Such patterns may be, for example, crop diseases or climate anomalies such as droughts.
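Searching for pixels with similar spectral patterns can be illustrated with the spectral angle mapper, a classic (non-neural) technique that compares each pixel's spectrum with a reference spectrum. This sketch is not the Intuition-1 algorithm; the function names, the 4-band toy image, the reference spectrum and the angle threshold are all hypothetical.

```python
import math


def spectral_angle(a, b):
    """Angle (radians) between two spectra treated as vectors; assumes non-zero spectra."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    # Clamp to [-1, 1] so floating-point drift cannot push acos out of its domain.
    cos_theta = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cos_theta)


def segment_by_reference(image, reference, max_angle):
    """Return a mask that is True where a pixel's spectrum resembles the reference."""
    return [
        [spectral_angle(pixel, reference) <= max_angle for pixel in row]
        for row in image
    ]


# Hypothetical 2x2 image with 4 bands per pixel: image[row][col] is a spectrum.
image = [
    [[0.1, 0.2, 0.4, 0.8], [0.8, 0.4, 0.2, 0.1]],
    [[0.2, 0.4, 0.8, 1.6], [0.5, 0.5, 0.5, 0.5]],
]
reference = [0.1, 0.2, 0.4, 0.8]  # hypothetical spectrum of the pattern of interest
mask = segment_by_reference(image, reference, max_angle=0.1)
print(mask)  # [[True, False], [True, False]]
```

Because the angle ignores overall brightness, the pixel whose spectrum is exactly twice the reference is still matched, which is one reason angle-based comparisons are popular for hyperspectral data.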

Intuition-1 is a technology demonstrator. It aims to prove that processing hyperspectral data with artificial intelligence already in orbit improves the efficiency of the remote sensing process, and that hyperspectral instruments based on KP Labs optics can observe phenomena that standard panchromatic, multispectral or radar satellites have so far been unable to detect.

The satellite is to have a mass of no more than 12 kg and dimensions no larger than 6U (30 x 20 x 10 cm), which places it in the class of so-called nanosatellites.

INTUITION-1 INFORMATION

Partners

Future Processing and FP Instruments

Duration

01.2018-12.2023. The mission is planned to start in 2023 and to be fully operational in 2024.

Financed by

The National Center for Research and Development of Poland

The main objective of the project is to create a system for automatic on-board processing of hyperspectral data that will independently select information and send the data of key importance to the user to Earth first. This will significantly reduce the volume of data sent to the ground station. The algorithms can also detect relevant changes in the observed areas and notify the user about them, which will significantly shorten the reaction time to observed phenomena and let the user receive processed data (with specific conclusions) immediately.
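Sending the most important data first can be sketched as a priority queue over processed image tiles, filled until a downlink budget is used up. This is a hypothetical illustration: the `Tile` fields, the relevance scores (standing in for the output of an on-board model) and the greedy policy are assumptions, not the mission's actual scheduling logic.

```python
from dataclasses import dataclass


@dataclass
class Tile:
    tile_id: str      # hypothetical identifier of a processed image tile
    relevance: float  # hypothetical score from an on-board model (higher = more important)
    size_mb: float    # size of the processed product for this tile


def plan_downlink(tiles, budget_mb):
    """Greedily queue tiles by descending relevance while they fit in the budget.

    A tile that does not fit is skipped, so a smaller, less relevant tile may
    still be queued later. This is a simple heuristic, not an optimal packing.
    """
    queue, used_mb = [], 0.0
    for tile in sorted(tiles, key=lambda t: t.relevance, reverse=True):
        if used_mb + tile.size_mb <= budget_mb:
            queue.append(tile.tile_id)
            used_mb += tile.size_mb
    return queue, used_mb


tiles = [
    Tile("flood-edge", 0.95, 30.0),
    Tile("cloud-cover", 0.05, 25.0),
    Tile("crop-stress", 0.80, 40.0),
    Tile("bare-soil", 0.30, 10.0),
]
queue, used_mb = plan_downlink(tiles, budget_mb=75.0)
print(queue, used_mb)  # ['flood-edge', 'crop-stress'] 70.0
```

With a 75 MB budget the two most relevant tiles fill 70 MB and the rest are left out. A real planner would also have to account for contact duration and link rate, which this sketch ignores.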

Example

The size of the transmitted data will be reduced to the necessary minimum. For example, recording the imaging results of a 40 km x 40 km area at a spatial resolution of 25 m/pixel, with spectral measurements in 150 bands (wavelength ranges), produces approx. 7 GB of data; after processing, the amount of data will be reduced at least 100-fold while retaining the important information.

This will significantly shorten the time between the occurrence of a phenomenon in the observed area and the moment information about it is obtained.
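The geometry of this example can be checked with a few lines of arithmetic. The sketch computes the image size in pixels and spectral samples, and the upper bound on the downlinked volume implied by a 100-fold reduction of 7 GB (taking 1 GB = 1000 MB, an assumption). It does not try to reproduce the 7 GB figure itself, which depends on bit depth and on how raw frames are recorded, details the text does not give.

```python
# Scene parameters quoted in the example.
swath_km = 40
gsd_m = 25            # ground sampling distance, metres per pixel
bands = 150
raw_volume_gb = 7.0   # data volume quoted for the recording
reduction_factor = 100

pixels_per_side = swath_km * 1000 // gsd_m   # 40 000 m / 25 m
pixels = pixels_per_side ** 2                # pixels in the 40 km x 40 km scene
samples = pixels * bands                     # one value per pixel per band

# Upper bound on what must be downlinked after on-board processing (1 GB = 1000 MB assumed).
max_downlink_mb = raw_volume_gb * 1000 / reduction_factor

print(pixels_per_side, pixels, samples, max_downlink_mb)  # 1600 2560000 384000000 70.0
```

So the scene is a 1600 x 1600 pixel image with 150 values per pixel, and after a 100-fold reduction at most about 70 MB of the quoted 7 GB would need to be sent.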

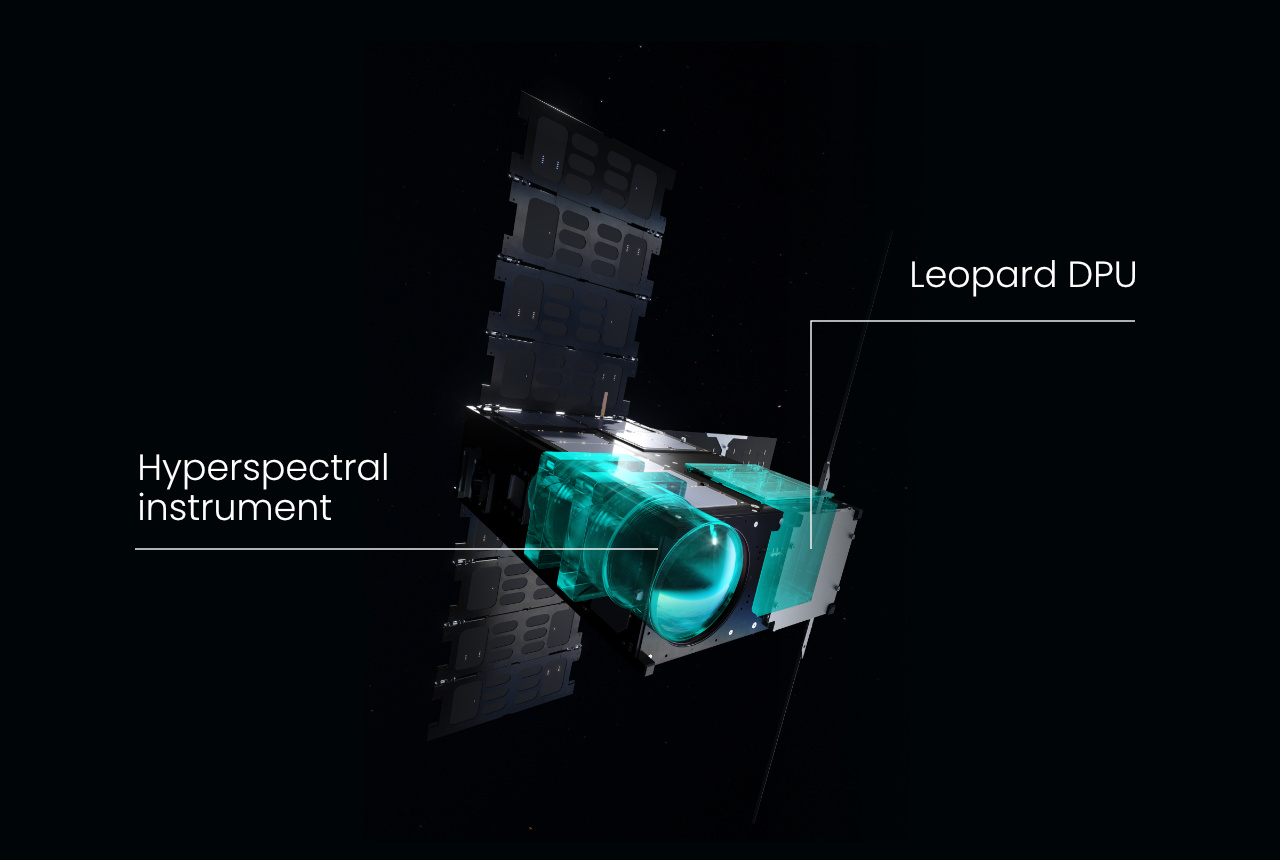

Intuition-1

Hyperspectral instrument

light wavelengths between 465 and 940 nm, divided into 192 channels, will allow a detailed analysis of the area under study

Leopard DPU

on-board image and data processing, and reconfiguration of the same satellite for a new purpose

AI-powered algorithms

optical data acquisition, preparation and manipulation, classification, segmentation, compression, and much more

The hyperspectral camera

The hyperspectral camera will use up to 192 spectral bands in the range of 465 nm to 940 nm. Each scene captured by the camera will consist of multiple frames, and each frame will register a different spectral range. To obtain a hyperspectral image of a given area, the frames from all spectral ranges therefore have to be assembled and processed by the data processing unit, Leopard, which will also store the images in non-volatile memory.
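Assembling single-range frames into one hyperspectral image amounts to stacking them into a data cube. The following is a minimal sketch, assuming the frames are already co-registered (all cover the same pixels) and are held in a dictionary keyed by band index; `assemble_cube` is a hypothetical helper, not part of any Leopard software interface.

```python
def assemble_cube(frames, n_bands):
    """Stack single-band frames into a cube indexed as cube[row][col][band].

    `frames` maps band index (0 .. n_bands-1) to a 2-D list of pixel values.
    All frames are assumed to be co-registered, i.e. to cover the same pixels.
    """
    missing = [b for b in range(n_bands) if b not in frames]
    if missing:
        raise ValueError(f"missing bands: {missing}")
    rows, cols = len(frames[0]), len(frames[0][0])
    for b in range(n_bands):
        frame = frames[b]
        if len(frame) != rows or any(len(line) != cols for line in frame):
            raise ValueError(f"band {b} does not match the shape of band 0")
    return [
        [[frames[b][r][c] for b in range(n_bands)] for c in range(cols)]
        for r in range(rows)
    ]


# Three hypothetical 2x2 frames, arriving out of band order.
frames = {
    1: [[10, 11], [12, 13]],
    0: [[0, 1], [2, 3]],
    2: [[20, 21], [22, 23]],
}
cube = assemble_cube(frames, n_bands=3)
print(cube[0][1])  # [1, 11, 21]: full spectrum of the pixel at row 0, column 1
```

The frames can arrive in any order; the result is always indexed as `cube[row][col][band]`, so each pixel carries its full spectrum. Real frames would first need geometric registration, which this sketch leaves out.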

Leopard

Leopard DPU on-board the Intuition-1 will enable segmentation and classification of hyperspectral imagery right in Earth’s orbit.

Thanks to in-orbit processing of the collected imagery, at least 100 times less data has to be transferred to the ground station. End users will therefore get faster access to the information gathered by the satellite that is relevant to them. Images will be captured daily, allowing continuous monitoring of events such as floods.

Intended on-board operations

- Pre-processing: correction of geometric errors arising from light refraction in the Earth's atmosphere and in the optical system, optical system vibrations, radial distortion, parallax, scattering, sunspots, solar irradiance, and imperfect focus of the camera lens.
- Applying a convolutional neural network to analyse a selected area and detect monitored events and materials.
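The core operation of a convolutional neural network can be shown with a single layer applied to a hyperspectral cube: a small kernel slides over the image, summing weighted values over neighbouring pixels and all bands, followed by a ReLU activation and a threshold. This is a hand-written sketch with made-up kernel weights, bias and threshold; a network actually run on Intuition-1 would have trained weights and many layers, and its architecture is not described here.

```python
def conv2d_multiband(cube, kernel, bias=0.0):
    """'Valid' 2-D convolution over cube[row][col][band].

    As in CNN libraries this is a cross-correlation (the kernel is not flipped).
    kernel[i][j] holds one weight per band; products are summed over the
    kernel window and over all bands, giving a single-channel feature map.
    """
    rows, cols = len(cube), len(cube[0])
    kh, kw = len(kernel), len(kernel[0])
    feature_map = []
    for r in range(rows - kh + 1):
        out_row = []
        for c in range(cols - kw + 1):
            acc = bias
            for i in range(kh):
                for j in range(kw):
                    pixel, weights = cube[r + i][c + j], kernel[i][j]
                    acc += sum(p * w for p, w in zip(pixel, weights))
            out_row.append(acc)
        feature_map.append(out_row)
    return feature_map


def relu(feature_map):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def detect(cube, kernel, bias, threshold):
    """One conv layer + ReLU, then a threshold to turn activations into a mask."""
    activations = relu(conv2d_multiband(cube, kernel, bias))
    return [[v > threshold for v in row] for row in activations]


# Hypothetical 3x3 image with 2 bands per pixel.
cube = [
    [[1, 0], [1, 0], [0, 1]],
    [[1, 0], [0, 1], [0, 1]],
    [[1, 0], [1, 0], [1, 0]],
]
# Hand-set 2x2 kernel: every position weights band 1 positively and band 0 negatively.
kernel = [[[-1, 1], [-1, 1]], [[-1, 1], [-1, 1]]]
print(conv2d_multiband(cube, kernel))                 # [[-2.0, 2.0], [-2.0, 0.0]]
print(detect(cube, kernel, bias=0.0, threshold=1.0))  # [[False, True], [False, False]]
```

The kernel responds to pixels where the second band is brighter than the first, so only the window dominated by such pixels passes the threshold.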

Use of the data collected

Agriculture

land cover classification, crop forecasting, crop maps, soil maps, plant disease detection, biomass monitoring, weed mapping

Forestry

forest classification, identifying species and the condition of forests, forestation planning

Environmental protection

water and soil pollution maps, land development management and analysis
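As an illustration of how narrow spectral bands can feed agricultural products such as biomass monitoring, the sketch computes the normalized difference vegetation index, NDVI = (NIR - Red) / (NIR + Red), for a single pixel by picking the bands closest to 670 nm (red) and 800 nm (near infrared). The text does not say which indices the mission computes; the evenly spaced band centres across 465-940 nm, the chosen wavelengths and the synthetic spectrum are all assumptions.

```python
N_BANDS = 192
LAMBDA_MIN_NM, LAMBDA_MAX_NM = 465.0, 940.0


def band_centers(n=N_BANDS, lo=LAMBDA_MIN_NM, hi=LAMBDA_MAX_NM):
    """Band centre wavelengths, ASSUMED evenly spaced from lo to hi inclusive."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]


def nearest_band(wavelength_nm, centers):
    """Index of the band whose centre is closest to the requested wavelength."""
    return min(range(len(centers)), key=lambda i: abs(centers[i] - wavelength_nm))


def ndvi(spectrum, centers, red_nm=670.0, nir_nm=800.0):
    """NDVI = (NIR - Red) / (NIR + Red); assumes NIR + Red is non-zero."""
    red = spectrum[nearest_band(red_nm, centers)]
    nir = spectrum[nearest_band(nir_nm, centers)]
    return (nir - red) / (nir + red)


centers = band_centers()
# Synthetic vegetation-like reflectance: dark in the visible, bright in the near infrared.
spectrum = [0.05 if c < 700.0 else 0.5 for c in centers]
print(round(ndvi(spectrum, centers), 3))  # 0.818
```

Healthy vegetation reflects strongly in the near infrared and absorbs red light, so values close to 1 indicate dense green biomass; with 192 narrow bands, the exact red and NIR wavelengths can be chosen per application instead of being fixed by a broad multispectral filter.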